Ever found yourself staring at a beautifully designed page, wondering why it’s completely invisible on Google? It’s a classic SEO mystery. The page looks perfect to you and your users, but for some reason, it just won't rank.

The answer often comes down to a simple, frustrating truth: what you see isn't what Googlebot sees. This isn't just a minor technical detail; it's the root cause of countless indexing and ranking headaches. Learning how to see your page through Google's "eyes" is the first step to fixing what's broken.

Why Your Site Looks Different to Googlebot

It’s a scenario I’ve seen play out dozens of times. A client has a stunning page with rich, interactive content, yet it's either not indexed or fails to rank for its most important keywords. The culprit is almost always a disconnect between the browser and the crawler. Maybe critical content is rendered with JavaScript that Googlebot struggles with, or perhaps essential CSS files are accidentally blocked.

By stepping into Googlebot's shoes, you can diagnose these hidden issues and uncover:

- Indexing problems: Find out exactly why certain pages never make it into the search results.

- Invisible content: See if important text, links, or images are missing from the crawled version of your page.

- Blocked resources: Identify if blocked CSS or JavaScript files are preventing Google from rendering your page correctly.

The Big Shift to Mobile-First Rendering

The game changed completely when Google announced mobile-first indexing back in 2016 and rolled it out over the following years. Googlebot now primarily crawls and analyzes the mobile version of your site to determine its rankings. The desktop version is secondary.

The data backs this up. A 2022 analysis found the median number of words on a mobile page indexed by Googlebot was just 366, while desktop pages had 421. That's about 13% fewer words on mobile. You can dig into more of this data in the full 2022 SEO analysis.

This means if your mobile site is a stripped-down, "lite" version of your desktop experience, Google is likely missing a huge chunk of your content.

Common Causes of These Discrepancies

So, what causes these gaps? Often, it starts with the robots.txt file. This simple text file guides crawlers, and a single incorrect line can have disastrous consequences. For anyone new to this, it's worth taking the time to understand the robots.txt file.

If you accidentally block the CSS and JavaScript files your site needs to load properly, Googlebot will only see a broken, garbled mess—not the slick interface your users enjoy.

Key Takeaway: A flawless user experience doesn't mean a thing if Googlebot can't see it. You have to actively check how your pages are rendered by the crawler to make sure your hard work isn't going to waste on content that’s effectively invisible.

This process confirms that Googlebot is finding your internal links and seeing your most valuable content. For an even deeper dive, a log file analysis is your best friend. It lets you see exactly how and when Googlebot interacts with your server, giving you the raw data behind its behavior. We have a full guide on the analysis of log files if you want to go down that rabbit hole.
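As a rough illustration of what a log file analysis involves, the sketch below counts requests per URL path where the user-agent mentions Googlebot. It assumes the "combined" access log format commonly used by Apache and Nginx. The sample lines, the function name, and the regular expression are made up for illustration, and matching on the user-agent alone can be fooled by bots that spoof it.

```python
import re
from collections import Counter

# Matches the request path and the user-agent in a "combined" format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(log_lines):
    """Count requests per path where the user-agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits

# Hypothetical sample lines, not real traffic.
sample_log = [
    '66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2024:10:00:05 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Jan/2024:10:01:00 +0000] "GET /blog/ HTTP/1.1" 200 8450 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

print(dict(googlebot_hits(sample_log)))
```

Pages that matter to you but never show up in a count like this are the ones worth inspecting first.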

Want to know exactly how Google sees your page? The best way is to go straight to the source. Google gives us a couple of fantastic tools that offer a direct window into its rendering process, cutting out all the guesswork.

The most powerful tool in your kit is the URL Inspection Tool, tucked away inside Google Search Console. This isn't a simulation. It shows you the real data Google has for your page, directly from its own index, covering everything from crawling to indexing.

Running a Live Test for an Up-to-the-Minute View

While looking at the indexed version is great for checking history, the real magic is the "Test Live URL" button. Clicking it sends Googlebot to crawl your page on the spot, giving you an immediate, real-time snapshot of how it renders right now.

This is a lifesaver for debugging. Imagine you just shipped a fix for some missing product images on a key e-commerce page. Instead of waiting days for Google to recrawl it, you can run a live test and confirm in minutes that Googlebot can now see and render those images perfectly.

After the test finishes, you get a treasure trove of diagnostic info:

- HTML: The rendered HTML, meaning the page's markup after Googlebot has fetched it and run its JavaScript.

- Screenshot: A visual render of what the page looks like to Googlebot on a mobile device.

- More Info: A detailed list of every resource the page tried to load (CSS, JavaScript, images, etc.) and whether it was successful.

That "More Info" tab is where I often find the smoking gun. A long list of "Blocked Resources" is a massive red flag. It usually means your robots.txt file is accidentally telling Googlebot not to access the very CSS or JavaScript files it needs to see your page correctly.

Comparing Google's Web Page Inspection Tools

To get the most out of Google's diagnostic suite, it helps to know which tool to use for which job. Here’s a quick breakdown of the URL Inspection Tool versus the public Mobile-Friendly Test.

While both tools use the same rendering technology, the URL Inspection Tool gives you the full story connected to your site's performance in search. The Mobile-Friendly Test is more of a quick, focused check-up.

Making Sense of the Rendered View

The moment of truth comes when you compare the live test screenshot with what you see in your own browser. Is your main navigation missing? Is a huge chunk of body content just gone? This side-by-side comparison instantly highlights issues caused by client-side JavaScript or those pesky blocked resources.

I once worked on a JavaScript-heavy single-page application where the entire navigation menu was invisible in the screenshot. A quick look at the "More Info" tab revealed a critical JavaScript file was being blocked. Fixing a single line in robots.txt made the whole site structure pop back into view for Googlebot.

Another great trick is to compare the Rendered HTML from the tool with the "View Page Source" in your browser. The page source is what your server sends first, but the rendered HTML is the final product after JavaScript runs. If your most important content only shows up in that final rendered version, you're putting a lot of faith in Google's ability to execute everything flawlessly.
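If you've copied both versions into files or strings, the comparison can be roughly automated. The sketch below is one possible approach, not a feature of any Google tool: it lists text that exists only in the rendered HTML. The class name, function names, and sample markup are all hypothetical.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect visible text chunks, skipping <script> and <style> contents."""
    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)

def visible_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.chunks

def only_in_rendered(source_html, rendered_html):
    """Text chunks that appear after rendering but not in the initial source."""
    in_source = set(visible_text(source_html))
    return [chunk for chunk in visible_text(rendered_html) if chunk not in in_source]

# Hypothetical example: the promo text is injected by JavaScript.
page_source = '<html><body><h1>Acme Widgets</h1><div id="app"></div><script>render()</script></body></html>'
rendered = '<html><body><h1>Acme Widgets</h1><div id="app"><p>Free shipping on all orders</p></div></body></html>'

print(only_in_rendered(page_source, rendered))  # ['Free shipping on all orders']
```

Anything this prints is content that depends entirely on JavaScript execution.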

Mastering the URL Inspection Tool takes you from guessing to knowing. You can finally confirm your fixes work, diagnose why a page looks broken to Google, and be confident that what users see is what the search engine sees.

Simulating Googlebot Directly in Your Browser

Sometimes you just need a quick-and-dirty way to see what Googlebot sees. You don't want to fire up a full crawler or wait for Search Console to process a live test. For those moments, your browser’s own developer tools are an underrated powerhouse.

The trick is to change your browser’s user-agent string. Think of the user-agent as a digital name tag. Every time your browser requests a page, it announces itself: "Hi, I'm Chrome on a Mac," or "I'm Firefox on Windows." By swapping that name tag to say "Googlebot," you can often get the server to show you exactly what it would serve to the real crawler.

It's my go-to first step for investigating weird redirects or content that seems to magically disappear only for search engines.

How to Change Your User-Agent in Chrome

You don’t need any fancy extensions for this. Everything you need is already built right into Chrome’s Developer Tools.

- Right-click anywhere on the page and hit "Inspect" to open Developer Tools. You can also use the shortcut Ctrl+Shift+I (Windows) or Cmd+Option+I (Mac).

- In the DevTools panel, find the three vertical dots in the top-right corner. Click them and go to More tools > Network conditions.

- A new "Network conditions" tab will pop up, usually at the bottom. Under "User agent," uncheck the box that says "Use browser default."

- Now, just open the dropdown menu and select "Googlebot Smartphone," then reload the page so it is requested with the new identity.

That's it. You're now browsing as Googlebot. Take a look around. Does any content vanish? Do you get sent to a different URL? These are classic red flags for user-agent-based cloaking.
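The same name-tag swap can be scripted if you want to repeat the check without clicking through DevTools. This is a minimal sketch using Python's standard library. The function names and the simplified desktop user-agent are assumptions, and `W.X.Y.Z` is the placeholder Google uses for the Chrome version in its published Googlebot string.

```python
import urllib.request

# Googlebot Smartphone user-agent; W.X.Y.Z stands in for the Chrome version.
GOOGLEBOT_SMARTPHONE = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # simplified stand-in

def build_request(url, user_agent):
    """Create a GET request that announces the given user-agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})

def fetch_summary(url, user_agent, timeout=10):
    """Fetch the URL and report status, final URL after redirects, and body size."""
    with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as resp:
        body = resp.read()
        return {"status": resp.status, "final_url": resp.geturl(), "bytes": len(body)}

def compare_user_agents(url):
    """Return both summaries side by side so differences stand out."""
    return {
        "browser": fetch_summary(url, DESKTOP_BROWSER),
        "googlebot": fetch_summary(url, GOOGLEBOT_SMARTPHONE),
    }

# Example (performs real network requests, so it is left commented out):
# print(compare_user_agents("https://www.yourwebsite.com/page"))
```

A different final URL or a large gap in body size between the two summaries is worth a closer look. Note that `urlopen` raises an `HTTPError` for 4xx and 5xx responses, which is itself a useful signal if it only happens for the Googlebot user-agent.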

Important Limitations to Understand

While this method is fantastic for a quick check, it's not a perfect simulation of Google's entire rendering process. It's crucial to know the limitations so you don't jump to the wrong conclusions.

Key Insight: Changing your user-agent tells the server you're Googlebot, but it doesn't change what your browser is. You are still using your local Chrome or Firefox engine, not Google’s sophisticated Web Rendering Service (WRS).

This is a critical distinction for a few reasons:

- JavaScript Execution: Your browser might handle JavaScript a bit differently than the specific version of Chrome that Googlebot uses for rendering.

- Resource Fetching: This local test won't account for IP-based blocking or other infrastructure rules that might prevent the actual Googlebot from accessing certain files.

- Geographic Location: Googlebot typically crawls from US-based IP addresses. If your site serves different content based on geography, your simulation from another country won't catch that.

At the end of the day, simulating Googlebot in your browser is a phenomenal diagnostic starting point. It's fast, free, and can instantly uncover blatant cloaking issues. But for the definitive word on how Google truly renders your page, always double-check your findings with the URL Inspection Tool in Google Search Console.

Scaling Your Analysis with SEO Crawlers

Google's own tools are fantastic for checking a handful of URLs. But what happens when you're staring down a 5,000-page e-commerce site or a massive enterprise blog? Checking pages one by one just isn't an option.

That's when SEO crawlers like Screaming Frog and Sitebulb become your best friends. These tools can crawl your entire website pretending to be Googlebot, letting you spot sitewide issues that are impossible to find manually.

Configuring Your Crawler to Act Like Googlebot

Getting the setup right is everything. By default, these crawlers announce themselves with their own user-agent. To get a true picture of what Google sees, you have to tell your crawler to identify as Googlebot.

It's usually a pretty simple tweak in the settings:

- Head over to the crawler’s configuration menu.

- Find the User-Agent setting.

- Switch it from the default to Googlebot Smartphone.

This one change tells your server, "Hey, I'm Google's mobile crawler." Your server will then serve up the exact content, redirects, or rules it would show the real Googlebot.

Don't Forget to Enable JavaScript Rendering

Just changing the user-agent isn't enough for most modern websites. So many sites rely on JavaScript to load everything from product details to the main navigation menu.

To really see your site through Google's eyes, you have to enable JavaScript rendering in your crawler. This tells the tool to not just download the initial HTML, but to actually execute all the scripts—just like Google's own Web Rendering Service (WRS) does.

Once the crawl is done, you can compare the raw HTML against the final, rendered HTML for every single page.

Pro Tip: This raw vs. rendered comparison is a goldmine for technical SEOs. If critical content like product descriptions or internal links only shows up after rendering, it’s a big red flag. It means you're heavily reliant on client-side rendering, which can sometimes slow down indexing, especially on large sites.
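If your crawler can export the raw and rendered HTML for each URL, a small script can flag pages whose internal links exist only after rendering. The sketch below assumes you have already loaded that export into a dictionary. The data shape, names, and sample pages are hypothetical and not tied to any particular crawler's export format.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""
    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)

def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links

def js_dependent_links(pages):
    """Map each URL to the links found only in its rendered HTML.

    `pages` maps URL -> (raw_html, rendered_html).
    """
    report = {}
    for url, (raw_html, rendered_html) in pages.items():
        missing = extract_links(rendered_html) - extract_links(raw_html)
        if missing:
            report[url] = sorted(missing)
    return report

# Hypothetical crawl export: "/shop" builds its links with JavaScript.
crawl = {
    "/": ('<a href="/pricing">Pricing</a>', '<a href="/pricing">Pricing</a>'),
    "/shop": (
        '<div id="app"></div>',
        '<div id="app"><a href="/shop/widgets">Widgets</a><a href="/shop/gadgets">Gadgets</a></div>',
    ),
}

print(js_dependent_links(crawl))  # {'/shop': ['/shop/gadgets', '/shop/widgets']}
```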

This kind of scaled-up analysis is a cornerstone of any serious SEO effort. In fact, solid enterprise SEO strategies always start with making sure that basic crawlability and rendering are flawless across thousands of pages.

Using cURL for a Raw, Unfiltered Look

For a more surgical, no-nonsense approach, there's the command-line tool cURL. It’s more technical, for sure, but it gives you something no browser can: the raw, unfiltered HTML your server sends directly to Googlebot before a single line of code is rendered.

It's the perfect tool for sniffing out tricky cloaking issues or subtle server misconfigurations.

Just pop open your terminal and run a command specifying the Googlebot user-agent and the URL you want to hit.

curl -A "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" https://www.yourwebsite.com/page

The wall of text that appears is the raw response your server sends to any client that identifies itself as Googlebot. It's the purest server response you can get.

Considering Googlebot scans over 300 million unique URLs a year, mastering these techniques ensures your site is ready for every single visit. You can learn more about Googlebot's immense scale and why preparing for its crawl is so critical.

So, you've confirmed Googlebot sees your page differently than a human user. Good. The diagnostic part is over; now it's time to fix things. This is where you translate those nerdy insights into actual ranking improvements.

Most of the time, these rendering screw-ups come down to a handful of classic mistakes.

Let's be honest, many of these issues are symptoms of a bigger problem. Getting the fundamentals right from the start by building a strong technical SEO foundation prevents most of this stuff from ever happening. But if you're already in the thick of it, the problem usually involves resources being blocked or delivered incorrectly.

A badly configured robots.txt file is probably the most common offender I see. Just one overly aggressive Disallow rule can lock Googlebot out of the very CSS and JavaScript files it needs to see your page properly. Without them, Google just gets a broken, skeletal version of your site.

Fixing Blocked Resources

To fix this, you need to get your hands dirty and audit your robots.txt file. Look for any directives that are blocking entire folders like /css/ or /js/. Often, the fix is as simple as deleting that one Disallow line, which immediately gives Googlebot the access it needs to render everything correctly.
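For a quick audit, you can test your rules against a list of resource URLs before and after editing the file. The sketch below uses Python's built-in `urllib.robotparser`. The rules and URLs are invented for illustration, and the standard-library parser does not replicate every detail of Google's matching (wildcards and rule precedence, for example), so treat the result as a first pass rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

def blocked_for_googlebot(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical robots.txt with overly broad folder rules.
robots_txt = """\
User-agent: *
Disallow: /css/
Disallow: /js/
Disallow: /admin/
"""

# Hypothetical resources a page needs in order to render.
resources = [
    "https://www.example.com/css/main.css",
    "https://www.example.com/js/app.js",
    "https://www.example.com/images/hero.png",
]

print(blocked_for_googlebot(robots_txt, resources))
```

With the sample rules, the stylesheet and the script are reported as blocked while the image is not. Removing the `/css/` and `/js/` lines and rerunning should return an empty list.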

Another classic mistake is hiding important content behind user interactions like a click or a hover. Googlebot doesn't click buttons or mouse over elements. If your key content only loads after one of these actions, it’s basically invisible to the search engine.

Key Takeaway: If your most important content isn't there when the page first loads, there's a good chance Googlebot will never find it. Make sure critical text and links are baked directly into the initial HTML.

Then there's the big one: accidental cloaking. This happens when your server is set up to show a "lite" version of a page to what it thinks are bots, while showing the full-featured version to humans. It's rarely malicious, but it's a serious problem. The best way to catch this is by regularly comparing your live page to what the URL Inspection Tool in Google Search Console shows you.

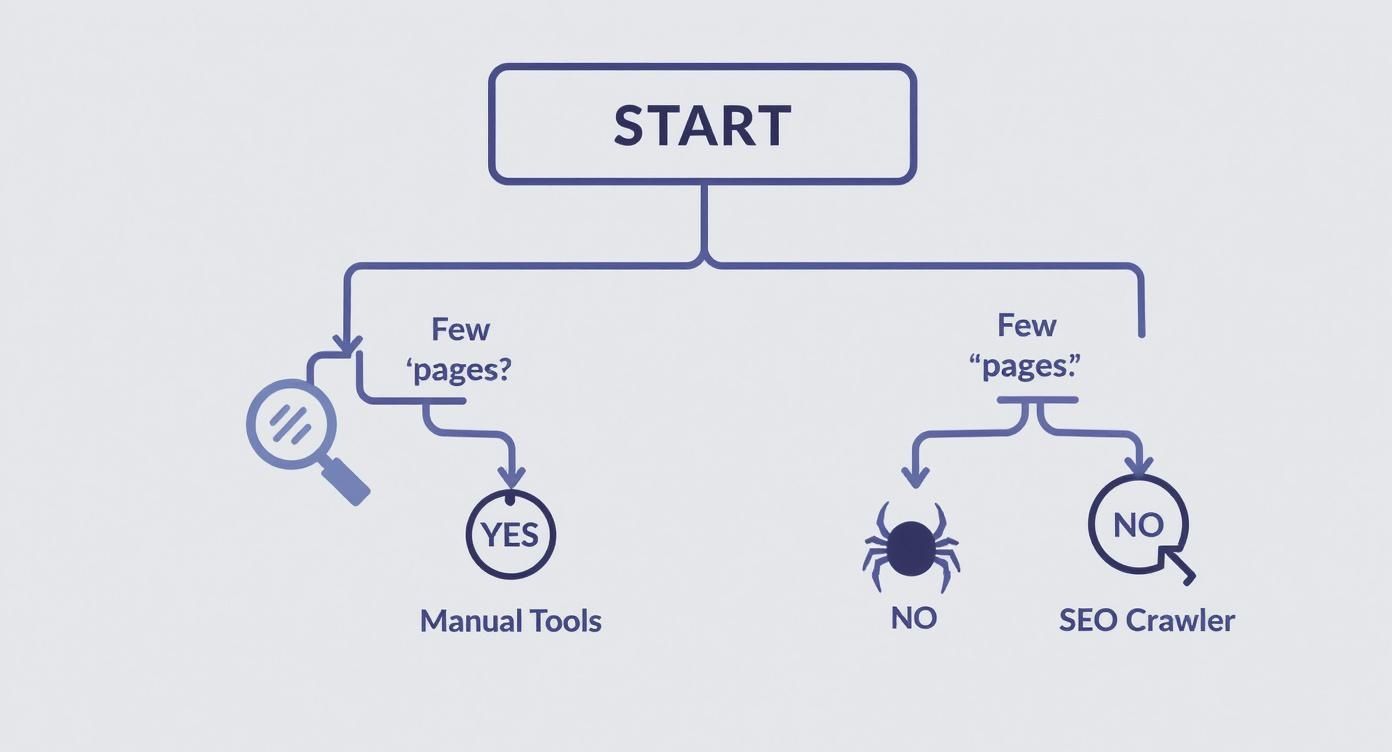

To help you choose the right tool for the job, this little decision tree breaks it down based on how many pages you need to check.

As the chart shows, if you’re just spot-checking a few URLs, manual tools are your best bet. For anything site-wide, you absolutely need a proper SEO crawler.

Auditing Crawler Efficiency

It's also worth understanding how Googlebot crawls. An analysis over a 14-day period showed that Googlebot crawled a site 2.6 times more often than AI crawlers combined, yet used about the same amount of bandwidth.

How? Googlebot’s requests are lean, averaging just 53 KB, while AI bots gobble up 134 KB per request. This tells you Googlebot is all about high-frequency, lightweight checks.
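The arithmetic behind "about the same bandwidth" is easy to check from the quoted figures. This short calculation uses only those numbers. The variable names are mine, and it assumes the 2.6x figure refers to request counts.

```python
# Figures quoted above: the 2.6x ratio is in requests, the sizes are per request.
googlebot_requests_per_ai_request = 2.6
googlebot_kb_per_request = 53
ai_kb_per_request = 134

# Data Googlebot transfers in the time AI bots make a single request.
googlebot_kb = googlebot_requests_per_ai_request * googlebot_kb_per_request
bandwidth_ratio = googlebot_kb / ai_kb_per_request

print(f"Googlebot: about {googlebot_kb:.1f} KB for every {ai_kb_per_request} KB from AI bots")
print(f"Bandwidth ratio: {bandwidth_ratio:.2f}")  # about 1.03
```

So 2.6 small requests add up to roughly the same transfer as one large one.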

Finally, don't forget the basics. A clean, validated XML sitemap is like giving Google a clear, easy-to-follow map of your site. It helps Google find and prioritize your most important pages. If you're rusty on this, we've got a great guide to help you validate your XML sitemap.
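As a starting point for validation, the sketch below checks a sitemap string for a few basic problems: malformed XML, missing entries, relative URLs, and duplicates. It is a simplified check under my own assumptions, not a full validator against the sitemap protocol, and the function name and sample sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_problems(xml_text):
    """Return a list of human-readable problems found in a sitemap string."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    if not locs:
        problems.append("no <loc> entries found")
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc}")
    for loc in sorted({item for item in locs if locs.count(item) > 1}):
        problems.append(f"duplicate URL: {loc}")
    return problems

# Hypothetical sitemap with one relative URL.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>/pricing</loc></url>
</urlset>"""

print(sitemap_problems(sample_sitemap))  # ['not an absolute URL: /pricing']
```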

By tackling these common problems one by one, you can close the gap between what your users see and what Googlebot actually indexes.

Common Questions About Viewing Your Site as Googlebot

As you start digging into how Googlebot sees your pages, you'll inevitably run into a few tricky questions. This isn't just about running a test; it's about knowing how to read the results and make the right call for your SEO strategy. Let's walk through a few of the most common ones I hear.

Is a Browser User-Agent Switch Really Accurate?

One of the most common shortcuts is to swap your browser's user-agent string to mimic Googlebot. It's fast, easy, and feels like you're getting a direct look. But is it 100% accurate?

The short answer is no.

Changing your user-agent is great for catching obvious red flags, like cloaking or user-agent-specific redirects. But it doesn't truly replicate Google's crawling environment. Your browser is still your browser—it has its own rendering engine, its own JavaScript quirks, and its own way of doing things. It's not the same as Google's Web Rendering Service (WRS), which is a specific, headless version of Chrome built for crawling.

The ground truth will always come from Google's own tools. For the most reliable view, trust the URL Inspection Tool. It uses the actual WRS to process your page, so there’s no guesswork involved. It’s a direct look, not a simulation.

Does a Clean Render Mean I Have No SEO Issues?

So, you ran your JavaScript-heavy page through the URL Inspection Tool's live test, and it looks perfect. High-five! But does that mean you're totally in the clear? Not so fast.

A clean render is a massive win, but it's not the whole story.

You still need to check the "Crawled page" details. Look for blocked resources or console errors that might not break the visual layout but are still tripping up the crawler. Also, think about your crawl budget. If every single page on your site requires heavy-duty JavaScript rendering, it eats up Google’s resources. For a large site, this can mean slower indexing for new or updated content, and that’s a big deal.

How Do I Spot Accidental Cloaking?

Accidental cloaking is one of those sneaky issues that can get you into serious trouble. It usually happens when a site tries to be clever by optimizing the experience based on a user's location, device, or cookie settings, but ends up showing Googlebot something completely different.

The best way to catch this is with a side-by-side comparison:

- First, run a live test in the URL Inspection Tool and grab the rendered HTML.

- Next, open the same page in an incognito browser window (this makes sure you're not logged in and don't have any specific cookies).

- Finally, compare the two versions.

If you see major differences in the main content, navigation, or product details, you might have an accidental cloaking problem on your hands. For a more technical check, using a command-line tool like cURL to fetch the raw HTML is another fantastic way to see exactly what your server is sending to the crawler before any scripts run.
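To put a rough number on "major differences," you can compare the words in the two versions. The sketch below is a crude heuristic of my own rather than an established cloaking test. It strips tags with regular expressions and scores the similarity of the remaining word sequences, and the sample pages and function names are hypothetical.

```python
import re
from difflib import SequenceMatcher

def normalized_words(html):
    """Crude text extraction: drop scripts, styles and tags, then split into words."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    text = re.sub(r"(?s)<[^>]+>", " ", html)
    return text.lower().split()

def content_similarity(html_a, html_b):
    """Similarity of the two pages' word sequences, from 0.0 to 1.0."""
    return SequenceMatcher(None, normalized_words(html_a), normalized_words(html_b)).ratio()

# Hypothetical versions of the same page.
googlebot_html = "<html><body><h1>Acme CRM</h1><p>Plans start at $29.</p></body></html>"
browser_html = "<html><body><h1>Acme CRM</h1><p>Plans start at $29.</p><script>track()</script></body></html>"
lite_html = "<html><body><h1>Acme CRM</h1></body></html>"

print(content_similarity(googlebot_html, browser_html))  # 1.0
print(content_similarity(googlebot_html, lite_html))     # 0.5
```

A score near 1.0 means the visible text matches. A much lower score is a prompt to look at what's missing, not proof of cloaking on its own.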
